In this second part of setting up a WebLogic cluster we will continue where we left off in part one and complete our cluster installation. We will also do some monitoring on the cluster setup to ensure that it is configured and working properly.

Main Theme

Just to recap what we've done so far:

1. we have installed WebLogic on both physical machines that make up the cluster,

2. we have created a WebLogic Domain on one of the physical machines, and

3. we have configured the domain: we created two WebLogic Managed Servers and two WebLogic Machines, we assigned the Managed Servers to WebLogic Machines and verified that the node manager running on the physical machines is reachable.

We will complete the setup by following these steps:

4. Copy the domain file structure to the other physical machine and enroll it to the WebLogic Domain

5. Ensure that both WebLogic Machines are operational

6. Create and configure the WebLogic Cluster

7. Ensure that the Cluster is operational

Let's get on with it!

4. Copy the domain file structure to the other physical machine and enroll it to the WebLogic Domain

WebLogic comes with a pack utility that allows you to pack a domain and move it from one place to another. We will instead use plain old Linux tar and gzip to pack our WebLogic domain directory structure and move it from one physical machine (the one on which we performed steps 2 and 3) to the other. Before doing so, we will shut down the domain and node manager if they are still running on the first machine. Once the packing is done, we will restart them.

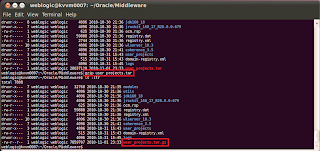

To shut down the domain, simply press Ctrl-C in the console running the domain. Alternatively, you can shut down the Administration Server from the Administration Console. Once the domain is shut down, go to the Middleware Home directory and issue the following tar command to pack the domain directory structure and its contents, i.e. the user_projects directory: tar cvf user_projects.tar user_projects

When the tar command is done, compress the archive by typing: gzip user_projects.tar. The file that needs to be moved and unpacked on the other machine is called user_projects.tar.gz.

The compressed archive of the user_projects domain file structure should be moved to the Middleware Home on the other physical machine (remember that the Middleware Home directories on both physical machines should be exactly the same) and uncompressed by typing gunzip user_projects.tar.gz

Finally, extract the archive by typing tar xvf user_projects.tar. This will create the user_projects directory structure on the second physical machine.
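If you prefer to script the pack and unpack steps, here is a minimal sketch using Python's standard tarfile module. This is an alternative to the tar and gzip commands above, not what was used in this post, and the function names pack_domain and unpack_domain are hypothetical. It assumes the archive is created in the Middleware Home and that you copy it to the other machine yourself.

```python
import tarfile
from pathlib import Path


def pack_domain(middleware_home, dir_name="user_projects"):
    """Create <dir_name>.tar.gz inside middleware_home and return its path."""
    home = Path(middleware_home)
    archive = home / (dir_name + ".tar.gz")
    # "w:gz" writes a gzip-compressed tar in one step (tar cvf followed by gzip).
    with tarfile.open(archive, "w:gz") as tar:
        # arcname keeps paths relative, so the archive unpacks as user_projects/...
        tar.add(home / dir_name, arcname=dir_name)
    return archive


def unpack_domain(archive, middleware_home):
    """Extract the archive into middleware_home (gunzip followed by tar xvf)."""
    with tarfile.open(archive, "r:gz") as tar:
        tar.extractall(middleware_home)
```

On the first machine you would call pack_domain with the Middleware Home path, and on the second machine unpack_domain with the copied archive and the same Middleware Home path.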

Now you need to restart the domain on the first (original) machine and use the WebLogic Scripting Tool (WLST) on the second machine to enroll the domain structure we just extracted in the WebLogic Domain. After making sure that the domain is started and in RUNNING state, issue the following commands on the second machine, the one we are now setting up:

Start the WebLogic Scripting Tool by changing to the wlserver_10.3/common/bin directory and running the wlst.sh script. At the wlst prompt, issue the following command to connect to the WebLogic Domain running on the other machine: connect('weblogic','weblogic1','t3://192.168.1.106:7001').

Verify that the connection was successful and then issue the following command to enroll this machine to the WebLogic Domain:

nmEnroll('/home/weblogic/Oracle/Middleware/user_projects/domains/clusteredDomain', '/home/weblogic/Oracle/Middleware/wlserver_10.3/common/nodemanager')

Verify that the machine was successfully enrolled into the Domain.

5. Ensure that both WebLogic Machines are operational

Now that the second machine has been successfully enrolled into the WebLogic Domain, we can start the node manager on the second machine and verify that it is Reachable by the WebLogic Machine. We already verified that the node manager on the first machine is Reachable back in step 3.

You can start the node manager by running the startNodeManager.sh script in the wlserver_10.3/server/bin directory. Again, ensure that the node manager is not started in SSL mode by setting the SecureListener parameter to false in the nodemanager.properties configuration file in wlserver_10.3/common/nodemanager. Note that nodemanager.properties might not exist until you first run startNodeManager.sh.
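Editing nodemanager.properties by hand works fine, but the change can also be scripted. The following is a minimal sketch, assuming the file is a plain key=value properties file; the helper name set_property is hypothetical. The node manager reads this file at start-up, so restart it after changing the value.

```python
from pathlib import Path


def set_property(properties_file, key, value):
    """Set key=value in a Java-style .properties file, appending the key if absent."""
    path = Path(properties_file)
    lines = path.read_text().splitlines()
    updated = False
    for i, line in enumerate(lines):
        # Skip comment lines; compare the text before the first "=" with the key.
        if not line.lstrip().startswith("#") and line.split("=", 1)[0].strip() == key:
            lines[i] = f"{key}={value}"
            updated = True
    if not updated:
        lines.append(f"{key}={value}")
    path.write_text("\n".join(lines) + "\n")


# Example call (path as used in this post, relative to the Middleware Home):
# set_property("wlserver_10.3/common/nodemanager/nodemanager.properties",
#              "SecureListener", "false")
```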

Now, with the node managers running on both physical machines, return to the Administration Console and verify that the node managers are Reachable for both Machines.

6. Create and configure the WebLogic Cluster

To create a WebLogic Cluster, use the Administration Console and, via the Domain Structure tree, navigate to Environment and then Clusters. On the Summary of Clusters table, click the New button to create a new WebLogic Cluster.

On the Create a New Cluster page, enter the Name of the Cluster, select Unicast for the Messaging Mode and click OK.

Ensure that the WebLogic Cluster was created successfully by checking the Messages area at the top of the page and confirming that the new Cluster appears in the Clusters table. Now click on the newly created Cluster to go to the Settings for Cluster page. On the Configuration General tab, in the Cluster Address field, enter the IP addresses of the physical machines separated by commas, supplying 7003 as the cluster listen port, e.g. 192.168.1.106:7003,192.168.1.107:7003 in this example. Click Save to save the changes.
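The Cluster Address value is simply a comma-separated list of host:port pairs. As a small illustration, here is a sketch that builds it from a list of addresses; the function name build_cluster_address is hypothetical, and the addresses and port are the ones used in this post.

```python
def build_cluster_address(hosts, port):
    """Join each host with the listen port into a comma-separated cluster address."""
    return ",".join(f"{host}:{port}" for host in hosts)


print(build_cluster_address(["192.168.1.106", "192.168.1.107"], 7003))
# prints 192.168.1.106:7003,192.168.1.107:7003
```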

While still on the Settings for Cluster page, go to the Configuration Servers tab and click Add on the Servers table to assign the Managed Servers to the Cluster.

On the Add a Server to Cluster page select the Managed Servers - one at a time - to add to the Cluster using the Select a server drop down and click Finish.

Repeat this step for both Managed Servers. In the end both Managed Servers should be shown on the Servers table for the Cluster.

7. Ensure that the Cluster is operational

Now that the Cluster is set up, we can start the Managed Servers and do some monitoring on the Cluster to ensure its proper operation. You can start the Managed Servers either by running the startManagedWebLogic.sh script in the user_projects/domains/clusteredDomain/bin directory on each physical machine, or from within the Administration Console. We will use the Administration Console, which will at the same time validate our setup. Go to the Summary of Servers page (via the Domain Structure tree, by clicking on Environment and then Servers) and click on the Control tab. Select the checkboxes for both Managed Servers (notice that they are both in SHUTDOWN state) and click the Start button to start them.

The Administration Console will delegate the start-up process to the node managers running on both machines. Each node manager will start the Managed Server assigned to the Machine controlled by the node manager. The process might take a minute or so to complete and once done both Managed Servers should be displayed on the Servers table with a RUNNING state. The Status of Last Action should be TASK COMPLETED. You will need to periodically refresh the page to see the final start-up status.
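As a quick sanity check from outside the Administration Console, you can also test whether each Managed Server is accepting connections on its listen port. The sketch below is only a TCP connect test; the helper name is_listening is hypothetical, and the addresses and port 7003 are the ones assumed in this post. A successful connection shows that the port is open, not that the server has reached the RUNNING state, so the console remains the authoritative view.

```python
import socket


def is_listening(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


# Example usage with the addresses from this post:
# for host in ("192.168.1.106", "192.168.1.107"):
#     print(host, is_listening(host, 7003))
```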

Now, with both Managed Servers running, let's take a look at our Cluster status. Return to the Summary of Clusters page (via Environment and then Clusters on the navigation tree) and click on the Cluster in the Clusters table. Then click on the Monitoring tab and observe the Cluster Server status on the Summary tab.

Click on the Health and Failover tabs to see other monitoring information and status.

This concludes our Cluster setup.

Conclusion

In this two-part post we went through a basic WebLogic cluster setup and configuration across two physical machines. Following the seven-step process outlined in part one of this post, we first installed WebLogic on both machines (step 1), created and initially configured the domain on one of the machines (steps 2 and 3), copied the domain to the other machine (step 4), verified that both machines are accessible by the domain (step 5), created the WebLogic cluster (step 6) and finally verified that the cluster is operational (step 7).

Until next time, keep on JDeveloping!


thanks a lot...

Thanks a lot. This really helped me understand a weblogic clustered environment installation

Prasad.

thanks a lot....for best explaination

You are a rockstar brother! Just worked perfectly. There is a zero error of margin in your explanation and execution.

Keep up the good work

Hi Nick,

Just want to Thank you for your amazing tutorial on Weblogic Clustering, that was an awesome work.I have few clarifications on the clustering

1) do i need to start domain servers on both the physical machines or just the domain server on the 1 physical machine would be ok?

2) Could you please help me in learning weblogic clustering

Thanks

Hi,

No, you only have to start the admin server on one machine. I am by no means an expert on this either, but the best way to get going is to start by looking at the Fusion Middleware High Availability Guide available from Oracle. Take a look at this link, although I am not sure this is the latest version of the document: http://docs.oracle.com/cd/E23943_01/core.1111/e10106/toc.htm

Hi nick,

I have checked Health and Failover for self setup clustering, and it's same as you mention in snap.

can u suggest me how to test clustering with some sample servlet deployment on cluster ?

You would have to test, using the same browser session, that your deployed application keeps working without any problems when you bring one of the servers down. Take a look at this post: http://www.techpaste.com/2012/05/check-failover-mechanism-cluster-environments-weblogic/

2014, still useful... Thanks

Thanks, very useful step-by-step guide.

2015 still useful too very thank you

Does the cluster listen port(7003) has to be the same as the manage server listen port (7003)

What will be the URL for the cluster and managed servers?

http://cluster1:7003/...

http://ManagedServer1:7003/...

http://ManagedServer2:7003/...

You don't directly access the cluster. You usually use a front end load balancer, such as OHS or Apache HTTP server configured with the WebLogic plugin to load-balance the user requests.

Hi Nick,

Thank you for this. But im having problem when i was starting the managed servers.

When i tried starting it, the status was failed not restartable. And the stack trace looks like this:

weblogic.management.ManagementException: Unable to obtain lock on C:\oracle\Middleware\user_projects\domains\PEMC_Domain\servers\ODI\FS Node 1\tmp\ODI\FS Node 1.lok. Server may already be running

at weblogic.management.internal.ServerLocks.getServerLock(ServerLocks.java:206)

at weblogic.management.internal.ServerLocks.getServerLock(ServerLocks.java:67)

at weblogic.management.internal.DomainDirectoryService.start(DomainDirectoryService.java:74)

at weblogic.t3.srvr.ServerServicesManager.startService(ServerServicesManager.java:461)

at weblogic.t3.srvr.ServerServicesManager.startInStandbyState(ServerServicesManager.java:166)

at weblogic.t3.srvr.T3Srvr.initializeStandby(T3Srvr.java:882)

at weblogic.t3.srvr.T3Srvr.startup(T3Srvr.java:572)

at weblogic.t3.srvr.T3Srvr.run(T3Srvr.java:469)

at weblogic.Server.main(Server.java:71)

Please help. Thank you!

Delete the C:\oracle\Middleware\user_projects\domains\PEMC_Domain\servers\ODI\FS Node 1\tmp\ODI\FS Node 1.lok lock file and restart WebLogic. You can also try deleting the complete tmp folder and restarting WebLogic.
